Earnings Call Transcript

🎯 This data dump is based on my earnings call analysis project with BNY. The objective is to find features relevant for predictive modeling.

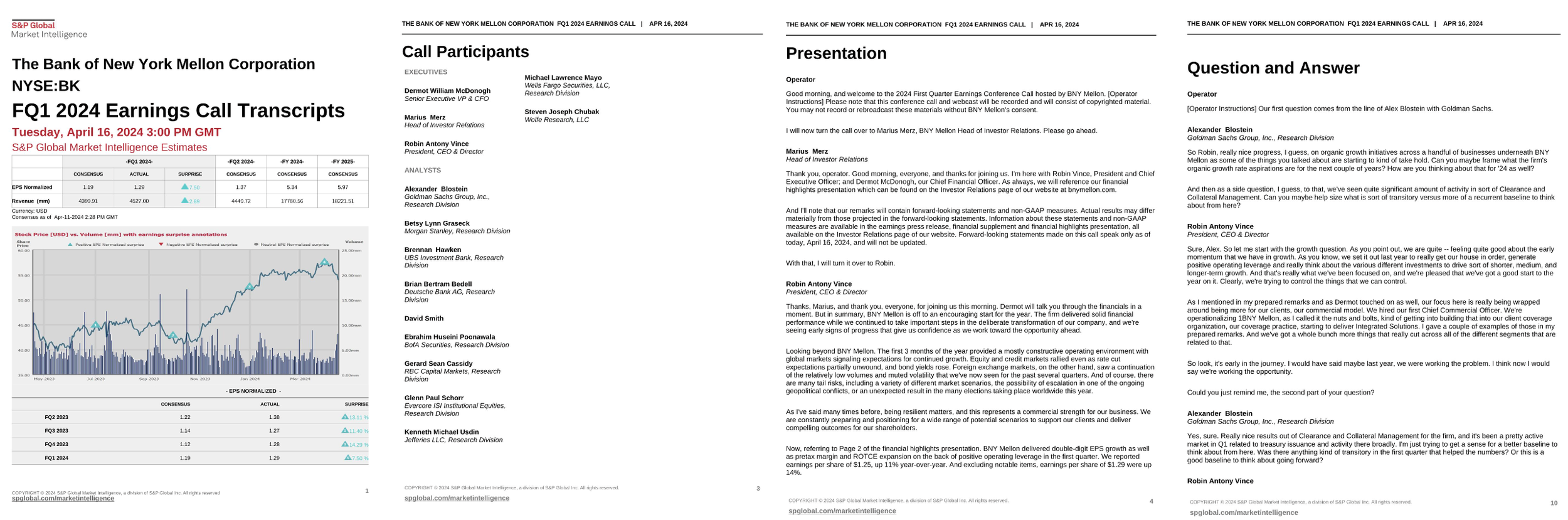

Data Overview

First page of each section of a transcript (a full transcript typically runs 8 to 20 pages, depending on the company and the length of the Q&A session).

Earnings calls are scheduled teleconferences, usually held each fiscal quarter, in which a public company's executives discuss recently released financial results, provide forward-looking guidance, and take questions from sell-side analysts and investors. The calls are expected by the market rather than legally required. SEC Regulation FD's fair-disclosure rules bar selective disclosure of material information, so companies make the calls publicly accessible and all market participants receive updates at the same time. The calls complement the formal 10-Q and 10-K filings with real-time color on performance drivers, strategic priorities, and risk factors that can inform valuation and trading decisions. Earnings-call transcripts are rich, low-latency, and fundamentally narrative.

Typical structure: almost every call follows the same fixed sequence.

Safe-harbor & operator script

Management prepared remarks (CEO/CFO)

Q&A with sell-side analysts

Closing remarks & housekeeping

Vendors tag speakers, titles and timestamps; “Level-4” feeds even split the call into explicit prepared_remarks and questions_and_answers blocks.

Formats: Raw HTML/text (Seeking Alpha), PDF, machine-readable JSON/XML (S&P Global “Machine-Readable Transcripts”, FinancialModelingPrep, EarningsCall API).

Size: A single transcript is ≈7–15 kB in plain text; a decade of S&P 500 calls is ~40 GB compressed, but audio (≈30–60 MB MP3 per call) quickly pushes the footprint past 100 GB.
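The size figures can be checked with a back-of-envelope calculation. The sketch below assumes 500 companies, four calls a year and ten years (about 20,000 calls). It takes the per-call sizes quoted above at face value; the call counts are assumptions, not vendor figures.

```python
# Back-of-envelope storage estimate for a decade of S&P 500 earnings calls.
# Assumptions (not vendor figures): 500 companies, 4 calls per year, 10 years.
COMPANIES = 500
CALLS_PER_YEAR = 4
YEARS = 10


def footprint_gb(per_call_mb: float, n_calls: int) -> float:
    """Total size in decimal GB for n_calls items of per_call_mb megabytes each."""
    return per_call_mb * n_calls / 1000


n_calls = COMPANIES * CALLS_PER_YEAR * YEARS  # 20,000 calls

text_low = footprint_gb(7 / 1000, n_calls)    # 7 kB of plain text per transcript
text_high = footprint_gb(15 / 1000, n_calls)  # 15 kB of plain text per transcript
audio_low = footprint_gb(30, n_calls)         # 30 MB MP3 per call
audio_high = footprint_gb(60, n_calls)        # 60 MB MP3 per call

print(f"{n_calls} calls")
print(f"plain text: {text_low:.2f}-{text_high:.2f} GB")
print(f"audio:      {audio_low:,.0f}-{audio_high:,.0f} GB")
```

On these assumptions, plain text totals well under 1 GB, while audio runs to roughly 0.6 to 1.2 TB, so audio dominates storage. A compressed text archive in the tens of GB presumably includes vendor markup, revisions, or metadata beyond the transcript text itself.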

Latency Profile

Low-latency delivery matters: the predictive power (information coefficient, IC) of language-based features decays noticeably after the first trading hour following the call (see the Berkeley I-School study of next-day returns).

| Feed | First Bullets | Full Transcript | Audio |
|---|---|---|---|
| FactSet StreetAccount | < 60 s headline summaries (human-curated) | 10–15 min after call end (XML) | on demand |
| S&P Global MRI | n/a | ≈25 min median lag (2025 white-paper sample) | on request |
| EarningsCall API | transcript_ready=true flag usually flips within an hour of the scheduled call time | same | MP3 via REST |
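Here, "IC" in the latency note above is read as the information coefficient: the Spearman rank correlation between a feature and subsequent returns across calls. This is an interpretation; the source does not define the term.

The minimal standard-library sketch below measures how the IC of one language feature differs between a first-hour and a next-day return horizon. The numbers are toy values for illustration only.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks, averaging the ranks of tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def rank_ic(signal, forward_returns):
    """Spearman rank IC: the Pearson correlation of the two rank vectors."""
    return pearson(ranks(signal), ranks(forward_returns))


# Toy illustration: one tone score and two forward-return horizons per call.
tone = [0.4, -0.1, 0.2, 0.7, -0.3]
ret_1h = [0.8, -0.5, 0.1, 1.2, -0.9]
ret_1d = [0.3, 0.1, -0.2, 0.5, -0.1]
for name, rets in {"first hour": ret_1h, "next day": ret_1d}.items():
    print(name, round(rank_ic(tone, rets), 2))
```

Tracking this number per horizon over many calls is one way to quantify the decay. Rank correlation is used rather than raw Pearson so that a few extreme post-earnings moves do not dominate the estimate.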

Data Processing Pipeline

This is an overview of what the pipeline could look like as part of a first-draft requirements sheet. Teams should refine based on tech stack and custom needs.

Ingest

Capture the JSON transcript feed and audio stream from vendors. Remove duplicate versions across providers.

Pre-clean

Normalize text by removing boilerplate language, correcting encoding issues, and expanding contractions (e.g., "can't" → "cannot").

Segmentation

Identify speakers and sections using vendor-provided tags. If tags are missing, apply regex rules (e.g., match "Operator:" lines) as a fallback.

Manual QA (Spot-check)

Inspect a 0.5% random sample weekly. Maintain and update a mapping of speaker aliases for entity resolution.

NLP/LLM Annotation

Apply sentence-level sentiment and subjectivity classification. Use FinBERT and GPT-4o for turn-level emotion tagging. Tag topics with BERTopic. Store vector embeddings in a Faiss index.

Audio ML (Optional)

Extract voice-based metrics such as mean pitch, jitter, and speech rate. Use openSMILE to extract the acoustic features used for vocal stress detection.

Validation

Use a Retrieval-Augmented Generation (RAG) pipeline to cross-reference source text and reduce hallucinations (per Sarmah et al., 2023).

Feature Store Dump

Save point-in-time features indexed by ticker and event_time for downstream modeling.
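The Ingest, Pre-clean and Segmentation steps above can be sketched with the standard library. Several parts are hypothetical and kept deliberately small:

- The contraction map is a tiny sample.
- Exact duplicate detection uses a SHA-256 hash of normalized text.
- The speaker regex assumes lines shaped like "Operator:" or "Jane Doe -- CFO:".

Real vendor layouts will need their own rules.

```python
import hashlib
import re
import unicodedata

# Hypothetical, deliberately small contraction map; extend for real use.
CONTRACTIONS = {
    "can't": "cannot",
    "won't": "will not",
    "don't": "do not",
    "we're": "we are",
    "it's": "it is",
}
_CONTRACTION_RE = re.compile(
    r"\b(" + "|".join(re.escape(c) for c in CONTRACTIONS) + r")\b", re.IGNORECASE
)
# Hypothetical fallback layout: a capitalized name/role followed by a colon starts a turn.
SPEAKER_RE = re.compile(r"^(?P<speaker>[A-Z][\w .'-]{0,60}?)\s*:\s*(?P<text>.*)$")


def _expand(match: re.Match) -> str:
    """Expand one contraction, keeping an initial capital letter."""
    word = match.group(0)
    expanded = CONTRACTIONS[word.lower()]
    return expanded[0].upper() + expanded[1:] if word[0].isupper() else expanded


def pre_clean(text: str) -> str:
    # Normalize Unicode and turn curly apostrophes into straight ones.
    text = unicodedata.normalize("NFKC", text).replace("\u2019", "'")
    text = _CONTRACTION_RE.sub(_expand, text)
    # Collapse runs of spaces/tabs but keep line breaks for segmentation.
    return re.sub(r"[ \t]+", " ", text).strip()


def content_key(text: str) -> str:
    """SHA-256 of whitespace- and case-normalized text."""
    canonical = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def dedupe(transcripts: list[str]) -> list[str]:
    """Keep the first copy of each transcript whose cleaned text is identical."""
    seen, unique = set(), []
    for t in transcripts:
        key = content_key(pre_clean(t))
        if key not in seen:
            seen.add(key)
            unique.append(t)
    return unique


def segment(raw: str) -> list[tuple[str, str]]:
    """Split untagged text into (speaker, text) turns; unmatched lines continue the last turn."""
    turns: list[tuple[str, str]] = []
    for line in raw.splitlines():
        line = line.strip()
        m = SPEAKER_RE.match(line)
        if m:
            turns.append((m.group("speaker"), m.group("text")))
        elif turns and line:
            speaker, text = turns[-1]
            turns[-1] = (speaker, f"{text} {line}".strip())
    return turns
```

Hashing only catches versions that are identical after normalization; near-duplicate revisions would need a similarity measure such as MinHash. The regex fallback will also misfire on content lines like "Revenue: up 5%", which is one reason the manual QA step remains.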
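"Point-in-time" implies that a model trained or backtested at time T only sees features that were available at T. The toy in-memory store below keys records by ticker and event_time, as the step describes. It adds a hypothetical available_at timestamp per version so that as-of reads ignore later vendor revisions. It is a sketch of the idea, not any particular feature-store product's API.

```python
from bisect import bisect_right
from datetime import datetime


class PointInTimeStore:
    """Toy feature store keyed by (ticker, event_time) with as-of reads."""

    def __init__(self):
        # (ticker, event_time) -> list of (available_at, features), sorted by available_at
        self._versions = {}

    def put(self, ticker, event_time, available_at, features):
        versions = self._versions.setdefault((ticker, event_time), [])
        versions.append((available_at, features))
        versions.sort(key=lambda v: v[0])

    def as_of(self, ticker, event_time, when):
        """Latest feature version for this call that was available at `when`, else None."""
        versions = self._versions.get((ticker, event_time), [])
        idx = bisect_right([v[0] for v in versions], when)
        return versions[idx - 1][1] if idx else None


# Usage with hypothetical availability times: an initial version and a later revision.
ts = datetime.fromisoformat
store = PointInTimeStore()
event = ts("2024-01-19T08:30:00-05:00")
store.put("STT", event, ts("2024-01-19T09:45:00-05:00"), {"feature_version": 1})
store.put("STT", event, ts("2024-01-20T09:00:00-05:00"), {"feature_version": 2})
print(store.as_of("STT", event, ts("2024-01-19T12:00:00-05:00")))
```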

Features for Predictive Modeling

```json
{
  "ticker": "STT",
  "event_time": "2024-01-19T08:30:00-05:00",
  "call_metadata": {
    "duration_minutes": 48,
    "num_speakers": 7,
    "num_analyst_questions": 13,
    "is_virtual_event": true,
    "is_guidance_provided": false
  },
  "sentiment_metrics": {
    "prepared_remarks_sentiment": {
      "positive": 0.62,
      "negative": 0.23,
      "neutral": 0.15
    },
    "qa_sentiment": {
      "positive": 0.38,
      "negative": 0.42,
      "neutral": 0.20
    },
    "sentiment_by_topic": {
      "bottom_line": {
        "positive": 0.45,
        "negative": 0.32,
        "neutral": 0.23
      },
      "metrics": {
        "positive": 0.51,
        "negative": 0.28,
        "neutral": 0.21
      },
      "operational_metrics": {
        "positive": 0.58,
        "negative": 0.22,
        "neutral": 0.20
      },
      "foreign_exchange": {
        "positive": 0.22,
        "negative": 0.63,
        "neutral": 0.15
      }
    },
    "sentiment_slope_during_qa": -0.0043,
    "sentiment_volatility": 0.12,
    "qa_alignment_score": 0.78
  },
  "emotion_signals": {
    "executive_emotions": {
      "confidence": 0.29,
      "positive_surprise": 0.06,
      "excitement": 0.11,
      "optimism": 0.18,
      "skepticism": 0.09,
      "curiosity": 0.04,
      "confusion": 0.02,
      "negative_surprise": 0.05,
      "doubt": 0.03,
      "concern": 0.08,
      "frustration": 0.02,
      "neutral": 0.12,
      "acknowledgement": 0.08
    },
    "analyst_emotions": {
      "confidence": 0.17,
      "positive_surprise": 0.03,
      "excitement": 0.02,
      "optimism": 0.06,
      "skepticism": 0.21,
      "curiosity": 0.24,
      "confusion": 0.06,
      "negative_surprise": 0.08,
      "doubt": 0.12,
      "concern": 0.13,
      "frustration": 0.06,
      "neutral": 0.09,
      "acknowledgement": 0.10
    },
    "emotion_by_financial_topic": {
      "bottom_line": {
        "confidence": 0.31,
        "concern": 0.18,
        "skepticism": 0.22
      },
      "metrics": {
        "confidence": 0.36,
        "neutral": 0.24,
        "acknowledgement": 0.18
      },
      "adjustments": {
        "skepticism": 0.29,
        "curiosity": 0.31,
        "confusion": 0.14
      }
    },
    "speaker_emotion_matrix": {
      "CEO": {
        "confidence": 0.38,
        "optimism": 0.26,
        "concern": 0.11,
        "curiosity": 0.05,
        "acknowledgement": 0.12
      },
      "CFO": {
        "confidence": 0.21,
        "skepticism": 0.18,
        "frustration": 0.04,
        "neutral": 0.24,
        "doubt": 0.09
      }
    },
    "emotion_transitions": {
      "confidence_to_concern": 0.12,
      "optimism_to_neutral": 0.08,
      "skepticism_to_confidence": 0.06
    }
  },
  "qa_dynamics": {
    "top_qa_topics": ["foreign_exchange", "operational_metrics", "bottom_line"],
    "top_presentation_topics": ["net_interest_income", "global_economics", "forward_looking"],
    "topic_emotion_mapping": {
      "foreign_exchange": {
        "dominant_emotion": "concern",
        "secondary_emotion": "skepticism"
      },
      "bottom_line": {
        "dominant_emotion": "confidence",
        "secondary_emotion": "optimism"
      }
    },
    "analyst_question_complexity": 0.47,
    "executive_response_length_avg_sec": 52,
    "question_followup_ratio": 0.12,
    "nonresponse_rate": 0.13
  },
  "market_reaction": {
    "stock_reaction_peak_delta_pct": -1.6,
    "stock_reaction_lag_minutes": 3,
    "volume_spike_ratio": 3.2,
    "option_implied_vol_change": 0.08
  }
}
```
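Most modeling libraries expect one flat row per call rather than a nested record. The sketch below flattens the record into dot-separated column names using only the standard library. It runs on an excerpt of the sample above. The naming scheme is an illustrative choice, and list values such as top_qa_topics are left as-is for separate encoding.

```python
import json


def flatten(record: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into dot-separated keys; lists and scalars are kept as values."""
    flat = {}
    for key, value in record.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


# Excerpt of the sample record above.
record = json.loads("""
{
  "ticker": "STT",
  "sentiment_metrics": {
    "qa_sentiment": {"positive": 0.38, "negative": 0.42, "neutral": 0.20},
    "sentiment_volatility": 0.12
  },
  "qa_dynamics": {"top_qa_topics": ["foreign_exchange", "operational_metrics", "bottom_line"]}
}
""")
row = flatten(record)
for column, value in row.items():
    print(column, value)
```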

Field Definitions & Grouped Explanations

1. Call Metadata

Fields that describe the basic properties and structure of the earnings call event.

ticker: Ticker symbol of the reporting company.

event_time: Timestamp when the earnings call began.

duration_minutes: Total length of the call.

num_speakers: Number of unique speakers (executives and analysts).

num_analyst_questions: Number of distinct analyst questions asked.

is_virtual_event: Indicates if the event was held virtually.

is_guidance_provided: Boolean flag indicating whether forward-looking guidance was explicitly addressed.

2. Sentiment Metrics

Structured sentiment scores derived from prepared remarks and Q&A using NLP.

prepared_remarks_sentiment: Share of positive, negative, and neutral sentences in the scripted portion (fractions summing to 1).

qa_sentiment: The same positive/negative/neutral breakdown for the Q&A, whose tone reflects unscripted, real-time exchanges.

sentiment_by_topic: Financial-topic-specific sentiment (e.g., bottom line, FX).

sentiment_slope_during_qa: Change in sentiment over time during Q&A — useful for modeling fading/increasing confidence.

sentiment_volatility: Standard deviation of sentiment score across the call.

qa_alignment_score: Semantic alignment between analyst questions and exec responses using cosine similarity.

sentiment_deviation_from_guidance: Difference in sentiment tone vs. expected or previously issued guidance.
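The three numeric summaries above can be sketched as follows. Several inputs are assumptions:

- Each Q&A segment has a scalar sentiment score, for example positive minus negative probability.
- The slope is taken against time in seconds from the start of the Q&A; the unit behind the sample value is not stated.
- Question and answer embeddings come from any sentence encoder.

The population standard deviation is one choice for "volatility"; the sample standard deviation would also be defensible.

```python
from math import sqrt
from statistics import pstdev


def ols_slope(times, scores):
    """Least-squares slope of sentiment score versus time (score units per time unit)."""
    n = len(times)
    mt, ms = sum(times) / n, sum(scores) / n
    num = sum((t - mt) * (s - ms) for t, s in zip(times, scores))
    den = sum((t - mt) ** 2 for t in times)
    return num / den


def sentiment_volatility(scores):
    """Population standard deviation of per-segment sentiment scores."""
    return pstdev(scores)


def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))


def qa_alignment(pairs):
    """Mean cosine similarity over (question_embedding, answer_embedding) pairs."""
    return sum(cosine(q, a) for q, a in pairs) / len(pairs)
```

A negative slope, like the sample's -0.0043, would indicate tone deteriorating as the Q&A progresses.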

3. Emotion Signals

Fine-grained emotions extracted using emotion detection models; categorized by role, topic, and transitions.

Executive & Analyst Emotion Profiles

executive_emotions / analyst_emotions: Normalized ratios of 13 labeled emotions during the event:

confidence, positive_surprise, excitement, optimism

skepticism, curiosity, confusion, negative_surprise

doubt, concern, frustration, neutral, acknowledgement
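One way to produce role-level emotion ratios is sketched below. It assumes the turn-level tagger emits a single emotion label per turn, together with the speaker's role. That output format is an assumption; the source does not specify what the FinBERT/GPT-4o step returns.

```python
from collections import Counter

# The 13 labels listed above.
EMOTIONS = (
    "confidence", "positive_surprise", "excitement", "optimism",
    "skepticism", "curiosity", "confusion", "negative_surprise",
    "doubt", "concern", "frustration", "neutral", "acknowledgement",
)


def emotion_ratios(tagged_turns, role):
    """Share of `role`'s turns carrying each label; sums to 1 when that role has labeled turns."""
    counts = Counter(label for r, label in tagged_turns if r == role and label in EMOTIONS)
    total = sum(counts.values())
    return {e: (counts[e] / total if total else 0.0) for e in EMOTIONS}


turns = [
    ("executive", "confidence"),
    ("executive", "confidence"),
    ("executive", "concern"),
    ("analyst", "curiosity"),
]
print(emotion_ratios(turns, "executive")["confidence"])
```

If the tagger instead scores every label independently (the sample values above sum to more than 1), an explicit normalization step would be needed before the scores can be read as ratios.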

Contextual Emotion Features

emotion_by_financial_topic: Maps sentiment/emotion levels to core financial topics (bottom line, metrics).

speaker_emotion_matrix: Emotion distribution by individual executive (e.g., CEO, CFO).

emotion_transitions: Proportion of notable emotion shifts across the call (e.g., confidence → concern).
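Emotion transitions can be counted from the sequence of per-turn labels, using the same key format as the sample (confidence_to_concern). The sketch assumes the proportion is taken over all consecutive turn pairs, so unchanged pairs count in the denominator. The source does not define what makes a shift "notable", so any further filtering is left out.

```python
from collections import Counter


def emotion_transitions(sequence):
    """Proportion of consecutive turn pairs where the label changes, keyed 'a_to_b'."""
    n_steps = len(sequence) - 1
    if n_steps <= 0:
        return {}
    changes = Counter(f"{a}_to_{b}" for a, b in zip(sequence, sequence[1:]) if a != b)
    return {key: count / n_steps for key, count in changes.items()}


print(emotion_transitions(["confidence", "confidence", "concern", "neutral"]))
```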

4. Q&A Interaction Dynamics

Structural features characterizing the flow, responsiveness, and depth of engagement in the Q&A segment.

top_qa_topics: Most discussed financial subjects within analyst questions.

top_presentation_topics: Key topics emphasized in prepared remarks.

topic_emotion_mapping: Dominant emotions tied to each financial topic.

analyst_question_complexity: Relative complexity of analyst questions using cosine similarity & topic depth.

executive_response_length_avg_sec: Average duration of exec answers — proxy for clarity/detail.

question_followup_ratio: Share of questions that led to follow-ups — proxy for unanswered concerns or vagueness.

nonresponse_rate: Share of executive responses that are vague, deferred, or avoid guidance.
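The two ratio features can be computed from per-question records. In this sketch, the input shape (an answer string plus a led_to_followup flag per analyst question) is an assumption. The regex of deferral phrases is a hypothetical stand-in for a trained non-response classifier.

```python
import re

# Hypothetical deferral phrases; a production system would use a trained classifier.
NONRESPONSE_RE = re.compile(
    r"\b(?:we (?:do not|don't) (?:provide|give) guidance"
    r"|not going to comment"
    r"|too early to (?:say|tell)"
    r"|we'll (?:get back|follow up))\b",
    re.IGNORECASE,
)


def qa_dynamics(exchanges):
    """exchanges: one dict per analyst question with 'answer' (str) and 'led_to_followup' (bool)."""
    if not exchanges:
        return {"question_followup_ratio": 0.0, "nonresponse_rate": 0.0}
    n = len(exchanges)
    followups = sum(e["led_to_followup"] for e in exchanges)
    nonresponses = sum(bool(NONRESPONSE_RE.search(e["answer"])) for e in exchanges)
    return {
        "question_followup_ratio": followups / n,
        "nonresponse_rate": nonresponses / n,
    }
```

A phrase list is easy to audit but also easy for executives to avoid, which matters for the gaming risk discussed at the end of this section.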

5. Market Reaction

Captures intraday or post-call stock and volatility movement tied directly to the earnings communication.

stock_reaction_peak_delta_pct: Max stock price movement (%) post-call (up or down).

stock_reaction_lag_minutes: Temporal lag (minutes) to max price reaction — reflects how quickly markets interpreted the information.

volume_spike_ratio: Ratio of post-call trading volume to average (baseline) volume.

option_implied_vol_change: Change in implied volatility (straddles, IV30) post-call — used in volatility-adjusted alpha models.
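The three price and volume fields can be derived from intraday bars. The sketch below makes several assumptions:

- Bars are (timestamp, close, volume) tuples sorted by time.
- The lag is measured from call end.
- The reference price is the last pre-call close.
- The baseline volume is supplied by the caller for a window of the same length.

Implied-volatility change needs options data and is not covered.

```python
from datetime import datetime


def market_reaction(bars, call_end, ref_price, baseline_volume):
    """Peak signed move (%), minutes to that peak, and post-call volume over baseline.

    bars: list of (timestamp, close, volume) tuples; only bars at or after call_end are used.
    """
    post = [b for b in bars if b[0] >= call_end]
    if not post:
        return None
    # Peak move: the bar whose close deviates most from the reference, keeping its sign.
    peak_ts, peak_close, _ = max(post, key=lambda b: abs(b[1] / ref_price - 1))
    return {
        "stock_reaction_peak_delta_pct": round(100 * (peak_close / ref_price - 1), 2),
        "stock_reaction_lag_minutes": (peak_ts - call_end).total_seconds() / 60,
        "volume_spike_ratio": sum(b[2] for b in post) / baseline_volume,
    }


end = datetime(2024, 1, 19, 9, 30)
bars = [
    (datetime(2024, 1, 19, 9, 30), 100.0, 1000),
    (datetime(2024, 1, 19, 9, 31), 99.0, 3000),
    (datetime(2024, 1, 19, 9, 33), 98.4, 5000),
    (datetime(2024, 1, 19, 9, 40), 99.5, 2000),
]
print(market_reaction(bars, end, ref_price=100.0, baseline_volume=3500))
```

The bars in the usage example are made up to show the mechanics, not market data.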

Alpha Hypotheses

This section outlines a set of hypotheses on how specific features from earnings calls may be predictive of short-term or long-term stock returns:

H1: Tone Divergence

When the prepared remarks sound overly positive but the Q&A session reveals a more negative or cautious tone, stocks tend to drop the next day.

▸ A Berkeley study supports this, showing that negative sentiment in the Q&A portion drives downward drift in price.

H2: On-Topic Alignment

Companies that directly answer analyst questions—staying focused and relevant—tend to outperform.

▸ A backtest of 192,000 calls by S&P Global found this strategy could generate about 390 basis points per year in excess returns in a sector-neutral long-short portfolio.

H3: Extreme Language

When executives use exaggeratedly positive words (e.g., “tremendous,” “unprecedented”), it often triggers short-term excitement.

▸ A study titled Hyperbole or Reality found that a 1-percentage-point increase in such words leads to a 6% spike in trading volume and a 0.63% return over 3 days.

H4: Non-Responses (NORs)

Vague or evasive answers—where executives avoid addressing the question—correlate with higher uncertainty in the market.

▸ This behavior tends to widen bid-ask spreads and worsen post-earnings announcement drift (PEAD), as shown by research from Liang & Carrasco-Kind (2025).

H5: Prosodic Stress

Vocal stress—like a sudden rise in pitch or faster speaking speed—especially from CFOs, often foreshadows bad news.

▸ Audio analysis shows these cues can precede negative guidance in the next quarter.
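H1 and H3 reduce to simple text statistics once the sentiment shares are available. This sketch makes two assumptions. Net tone is defined as positive minus negative share. The hyperbole lexicon is a short hypothetical list, not the word list used in the cited study.

```python
import re

# Hypothetical hyperbole lexicon; not the word list from the cited study.
HYPERBOLE = {"tremendous", "unprecedented", "incredible", "extraordinary", "phenomenal"}


def net_tone(shares):
    """Positive minus negative share for one section's sentiment breakdown."""
    return shares["positive"] - shares["negative"]


def tone_divergence(prepared, qa):
    """H1 signal: how much more upbeat the prepared remarks were than the Q&A."""
    return net_tone(prepared) - net_tone(qa)


def hyperbole_share(text):
    """H3 signal: percentage of word tokens found in the hyperbole lexicon."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return 100 * sum(t in HYPERBOLE for t in tokens) / len(tokens) if tokens else 0.0


prepared = {"positive": 0.62, "negative": 0.23, "neutral": 0.15}
qa = {"positive": 0.38, "negative": 0.42, "neutral": 0.20}
print(round(tone_divergence(prepared, qa), 2))
```

With the sample record's shares, the divergence is about 0.43. Under H1, a large positive gap like this would be read as a bearish signal for the next day.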
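For H5, the voice metrics named in the pipeline (mean pitch, jitter, speech rate) can be computed from pitch periods produced by a pitch tracker such as openSMILE or Praat. This sketch assumes the periods are already extracted, in seconds. Local jitter follows the common definition: mean absolute difference of consecutive periods divided by the mean period. Comparing pitch to a speaker's own baseline (for example, their prepared remarks) is an illustrative choice, not a validated stress measure.

```python
from statistics import mean


def local_jitter(periods_s):
    """Mean |T_i - T_(i-1)| divided by the mean period (dimensionless)."""
    diffs = [abs(b - a) for a, b in zip(periods_s, periods_s[1:])]
    return mean(diffs) / mean(periods_s)


def mean_pitch_hz(periods_s):
    """Mean fundamental frequency, averaging the per-cycle frequencies 1 / T_i."""
    return mean(1 / t for t in periods_s)


def speech_rate_wpm(n_words, duration_s):
    """Words per minute over a speaking segment."""
    return 60 * n_words / duration_s


def pitch_rise_pct(current_hz, baseline_hz):
    """Percent change in mean pitch relative to the same speaker's baseline."""
    return 100 * (current_hz / baseline_hz - 1)
```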

Risks and Mitigation

This part identifies the operational and compliance risks associated with using earnings call data for modeling, along with how to manage them:

Revision Lag

Vendors may update transcript data up to 24 hours after the call, which can compromise the point-in-time integrity of features.

▸ Mitigation: Save the exact version of the transcript at the time of processing, and log the vendor version ID to ensure reproducibility.

Speaker Mis-Tags

Errors in labeling who is speaking (e.g., mixing up the CEO with the operator) can distort sentiment and emotion features.

▸ Mitigation: Use data from two vendors and apply majority-vote logic. Also use heuristics, such as unexpected changes in speaker count, to detect errors.

Audio Drop-Outs

Poor call quality can break prosody models that rely on clear voice signals.

▸ Mitigation: Use confidence scoring to flag unreliable audio. If needed, fall back to text-based features only.

Regulation Fair Disclosure (Reg FD) & Compliance

There are legal risks with how transcripts are accessed and used, especially around redistribution or storage.

▸ Mitigation: Ensure access is tracked, restrict redistribution, and store hashed content. Enforce entitlement checks.

Alpha Decay from Gaming

Once executives become aware of what models are measuring, they may change their behavior (e.g., using more positive words).

▸ Mitigation: Regularly refresh features and prioritize signals that are harder to manipulate, like evasiveness or Q&A alignment.
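The revision-lag and Reg FD mitigations both come down to recording exactly what was processed. This minimal sketch logs the vendor version ID alongside a SHA-256 hash of the text, so that a later re-download can be checked for silent revisions. The field names are hypothetical, and the raw text itself would live in an entitlement-controlled store.

```python
import hashlib
from datetime import datetime, timezone


def _sha256(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def snapshot(transcript_text, vendor, vendor_version_id, processed_at=None):
    """Record what was processed: vendor metadata plus a content hash (no raw text)."""
    processed_at = processed_at or datetime.now(timezone.utc)
    return {
        "vendor": vendor,
        "vendor_version_id": vendor_version_id,
        "processed_at": processed_at.isoformat(),
        "sha256": _sha256(transcript_text),
    }


def is_same_version(snap, transcript_text):
    """True if the text still matches the snapshot, i.e. no silent vendor revision."""
    return _sha256(transcript_text) == snap["sha256"]
```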